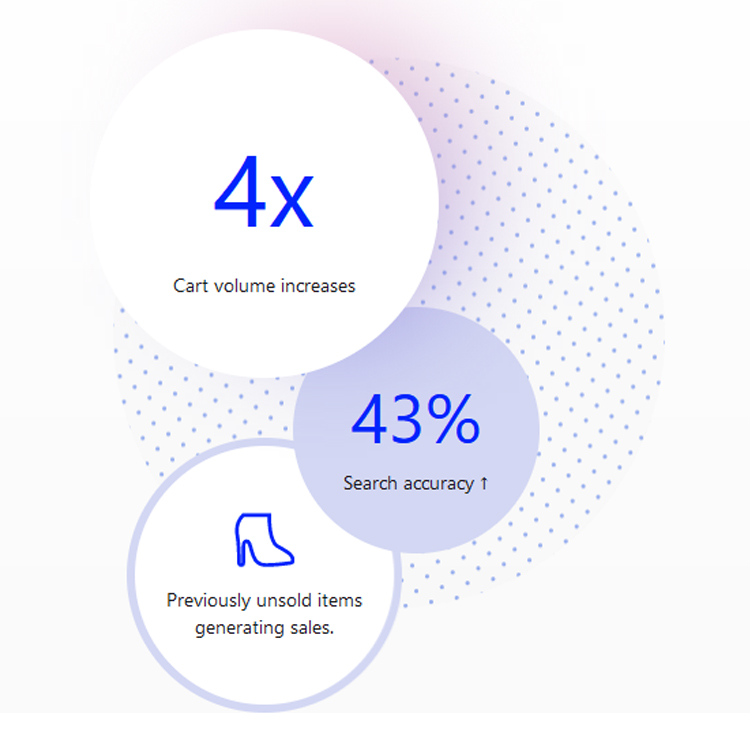

No more excuses to avoid product discovery. A single source of truth for your user feedback.

Powered by AI.

// Category

Video Generator

// Description

Machine learning doesn’t need to be so hard. Run models in the cloud at scale.

// Overview

Replicate lets you run machine learning models with a few lines of code, without needing to understand how machine learning works.

Thousands of models, ready to use

Machine learning can do some extraordinary things. Replicate’s community of machine learning hackers has shared thousands of models that you can run.

Language models

Models that can understand and generate text.

Super resolution

Upscaling models that create high-quality images from low-quality images.

Image to text

Models that generate text from images, such as captions or descriptions.

Video creation and editing

Models that create and edit videos.

Image restoration

Models that improve or restore images, for example by removing noise or blur.

Text to image

Image and video generation models trained with diffusion processes.

Imagine what you can build

With Replicate and tools like Next.js and Vercel, you can wake up with an idea and watch it hit the front page of Hacker News by the time you go to bed.

Here are a few of our favorite projects built on Replicate. They’re all open-source, so you can use them as a starting point for your own projects.

Push

You’re building new products with machine learning. You don’t have time to fight Python dependency hell, get mired in GPU configuration, or cobble together a Dockerfile.

That’s why we built Cog, an open-source tool that lets you package machine learning models in a standard, production-ready container.

First, define the environment your model runs in with cog.yaml:
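A minimal sketch of what this file can look like, assuming a GPU model that needs PyTorch. It is wrapped in a small Python helper that writes `cog.yaml` to disk. The keys (`build`, `gpu`, `python_version`, `python_packages`, `predict`) are Cog configuration fields. The Python version and the `torch` pin are illustrative assumptions, so pin whatever your model actually needs. `write_cog_yaml` is a hypothetical helper, not part of Cog.

```python
from pathlib import Path

# Minimal cog.yaml. The keys are Cog config fields; the Python version
# and torch pin are illustrative assumptions, not requirements.
COG_YAML = """\
build:
  gpu: true
  python_version: "3.11"
  python_packages:
    - "torch==2.1.0"
predict: "predict.py:Predictor"
"""


def write_cog_yaml(project_dir: str = ".") -> Path:
    """Hypothetical helper: write cog.yaml into project_dir and return its path."""
    path = Path(project_dir) / "cog.yaml"
    path.write_text(COG_YAML)
    return path


if __name__ == "__main__":
    print(f"wrote {write_cog_yaml()}")
```

The `predict` field uses the form `file.py:ClassName`, telling Cog which predictor class to load.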

Next, define how predictions are run on your model with predict.py:
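A minimal sketch of `predict.py`, assuming Cog's `BasePredictor` and `Input` interface. `setup()` runs once to load the model, and `predict()` runs once per request. Its typed arguments become the model's inputs. The "model" here is a hypothetical placeholder (a greeting) rather than real weights. The `try`/`except` fallback is not part of Cog; it only lets the file run without the `cog` package installed.

```python
# predict.py: a toy Cog predictor. The "model" is a hypothetical
# placeholder; a real predictor would load weights in setup().
try:
    from cog import BasePredictor, Input
except ImportError:
    # Stand-ins (NOT part of Cog) so this sketch runs without Cog installed.
    class BasePredictor:
        def setup(self) -> None:
            pass

    def Input(default=None, description: str = ""):
        # Cog's real Input() attaches metadata; this stand-in returns the default.
        return default


class Predictor(BasePredictor):
    def setup(self) -> None:
        """Load the model once, so repeated predictions stay fast."""
        # Hypothetical: a real model would load weights here (e.g. with torch.load).
        self.greeting = "hello"

    def predict(
        self,
        name: str = Input(description="Who to greet", default="world"),
    ) -> str:
        """Run a single prediction on the model."""
        return f"{self.greeting}, {name}"


if __name__ == "__main__":
    predictor = Predictor()
    predictor.setup()
    print(predictor.predict(name="Replicate"))  # hello, Replicate
```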

Now, you can run predictions on this model locally:
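Locally, this is a `cog predict` call with one `-i name=value` flag per input. File inputs take an `@` prefix, for example `-i image=@input.jpg`. The sketch below builds that command for a model with a single `name` input (a hypothetical example) and runs it only if `cog` is on the `PATH`. It assumes Cog and Docker are installed. `cog_predict_command` is a hypothetical helper, not part of Cog.

```python
import shlex
import shutil
import subprocess


def cog_predict_command(**inputs: str) -> list[str]:
    """Hypothetical helper: build argv for `cog predict`, one `-i key=value` per input.

    Prefix a value with "@" to pass a local file.
    """
    argv = ["cog", "predict"]
    for key, value in inputs.items():
        argv += ["-i", f"{key}={value}"]
    return argv


if __name__ == "__main__":
    cmd = cog_predict_command(name="Replicate")
    if shutil.which("cog") is None:
        print("cog not found; would run:", shlex.join(cmd))
    else:
        # Builds (or reuses a cached) Docker image, then runs one prediction.
        subprocess.run(cmd, check=True)
```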

Finally, push your model to Replicate, and you can run it in the cloud with a few lines of code:

Scale

Deploying machine learning models at scale is horrible. If you’ve tried, you know. API servers, weird dependencies, enormous model weights, CUDA, GPUs, batching. If you’re building a product fast, you don’t want to be dealing with this stuff.

Replicate makes it easy to deploy machine learning models. You can use open-source models off the shelf, or you can deploy your own custom, private models at scale.

Automatic API

Define your model with Cog, and we’ll automatically generate a scalable API server for it that follows standard practices, then deploy it on a large cluster of GPUs.
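Cog-built images include an HTTP prediction server, which you can try locally before deploying. The sketch below assumes an image is already running on port 5000. For example, `cog build -t my-model` followed by `docker run -d -p 5000:5000 my-model` (add `--gpus all` for GPU models). The tag `my-model` and the `name` input are hypothetical. The server takes `{"input": {...}}` on `POST /predictions` and replies with JSON that includes the prediction's `status` and `output`.

```python
import json
import urllib.request

# Assumes a Cog-built image is running locally (hypothetical tag "my-model"):
#   cog build -t my-model && docker run -d -p 5000:5000 my-model
COG_URL = "http://localhost:5000/predictions"


def build_local_request(inputs: dict) -> urllib.request.Request:
    """Cog's server expects {"input": {...}} on POST /predictions."""
    return urllib.request.Request(
        COG_URL,
        data=json.dumps({"input": inputs}).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def predict_locally(inputs: dict, timeout: float = 300.0) -> dict:
    """Send one prediction and return the parsed JSON response."""
    with urllib.request.urlopen(build_local_request(inputs), timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        result = predict_locally({"name": "Replicate"})
        print(result.get("status"), result.get("output"))
    except OSError as err:  # urllib's URLError is a subclass of OSError
        print("no Cog server on localhost:5000:", err)
```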

Automatic scale

If you get a ton of traffic, Replicate scales up automatically to handle the demand. If you don’t get any traffic, we scale down to zero and don’t charge you a thing.

Pay by the second

Replicate bills you only for the time your code is running. You don’t pay for expensive GPUs when you’re not using them.
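To make the arithmetic concrete, the sketch below compares per-second billing for a few predictions with keeping the same GPU running for an hour. The rate and runtimes are hypothetical numbers chosen for illustration, not Replicate's prices. `Decimal` avoids floating-point rounding in the money math.

```python
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical price per GPU-second, for illustration only; not a real Replicate rate.
RATE_PER_SECOND = Decimal("0.001")
CENT_FRACTION = Decimal("0.0001")  # round results to four decimal places


def per_second_cost(runtimes_seconds: list[float], rate: Decimal = RATE_PER_SECOND) -> Decimal:
    """Bill only the seconds that predictions actually ran."""
    total = sum(Decimal(str(s)) for s in runtimes_seconds)
    return (total * rate).quantize(CENT_FRACTION, rounding=ROUND_HALF_UP)


def always_on_cost(hours: float, rate: Decimal = RATE_PER_SECOND) -> Decimal:
    """Cost of keeping the same GPU running, busy or idle, for `hours`."""
    seconds = Decimal(str(hours)) * 3600
    return (seconds * rate).quantize(CENT_FRACTION, rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    runs = [4.2, 3.8, 12.0]  # hypothetical runtimes of three predictions, in seconds
    print(per_second_cost(runs))  # 0.0200
    print(always_on_cost(1))      # 3.6000
```

With these made-up numbers, three predictions totalling 20 seconds cost a small fraction of an hour of idle-inclusive GPU time.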
