// Tool

CSM.ai generates 3D models and worlds from text, images, or sketches

// Type

3D Modeling Tool

// Category

Developer Tools

// Examples

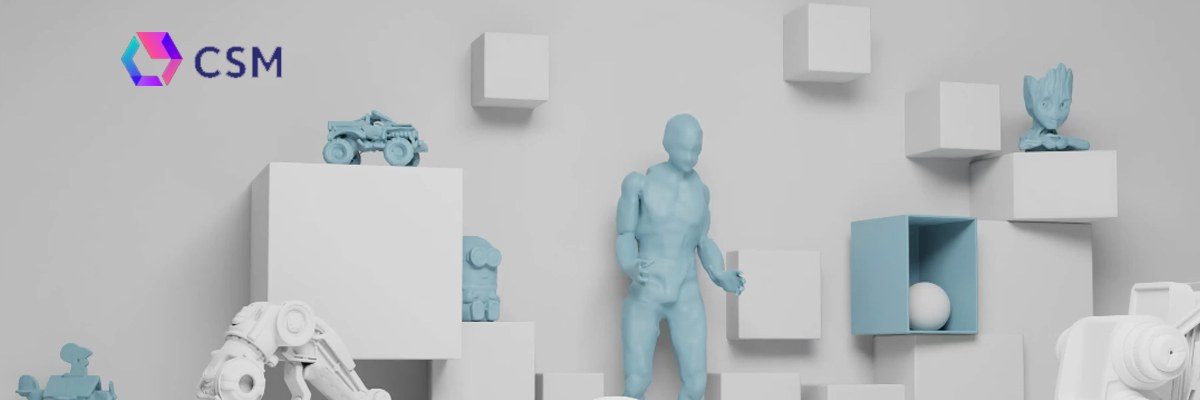

CSM.ai is an innovative tool for creating 3D models and virtual worlds from simple inputs like images, text, or sketches. It’s perfect for game development, VR, AR, architecture, and product prototyping, offering customizable, game-engine-ready assets. Whether you’re an artist or developer, this tool accelerates 3D creation.

// URL

// Tags

#3DModeling #AI3D #GameDevelopment #VR #AR #Simulation #ProductPrototyping

// Description

3D worlds from any input

MULTI-MODAL 3D GENERATION

// Overview

Create 3D assets from video, image or text.

Generate assets with high-resolution geometry, UV-unwrapped textures, and neural radiance fields, using the latest breakthroughs in neural inverse graphics.

Creating environments and games is now faster and more accurate than before.

Want to integrate CSM capabilities into your own app or platform? Use our APIs (coming soon).

CommonSim-1: Generating 3D Worlds

A longstanding dream in generative AI has been to digitally synthesize dynamic 3D environments – interactive simulations consisting of objects, spaces, and agents. Simulations enable us to create synthetic worlds and data for robotics and perception, new content for games and metaverses, and adaptive digital twins of everyday and industrial objects and spaces. Simulators are also the key to imaginative machines endowed with a world model: they allow such machines to visualize, predict, and consider possibilities before taking action. But today’s simulators are too hard to build and too limited for our needs. Generative AI will change this.

Generative AI requires compressing diverse human experiences and cultures into a large-scale model. Indeed, recent large generative models (such as DALL-E 2, Stable Diffusion, Imagen, Make-A-Scene, and many others) have shown that this dream may be possible today. A natural next step in this progression is for AI systems to create a new class of simulators that learn to generate 3D models, long-horizon videos, and efficient actions. These feats are now becoming attainable thanks to recent advances in neural rendering, diffusion models, and attention architectures.

Common Sense Machines has built a neural simulation engine we call CommonSim-1. Instead of laboriously creating content and spending weeks navigating complex tools, users and robots interact with CommonSim-1 via natural images, actions, and text. These interfaces will enable us to rapidly create digital replicas of real-world scenarios, or make imagining entirely new ones as natural as taking a photo or describing a scene in text. Today, we are previewing these model capabilities. We are excited to see what developers, creators, researchers, and others create! Sign up below for early access to our application, APIs, checkpoints, and code.
